Cooperative Aerial Manipulation of Cable-Suspended Loads

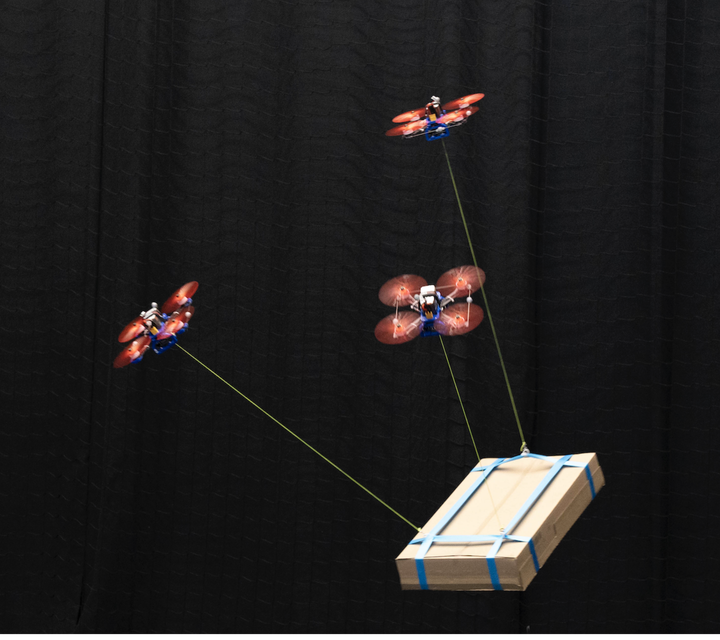

Three drones collaboratively carrying a cable-suspended load

Drones have limited payload capacity, and developing heavy-lifting drones is costly. Using multiple drones to lift a load collaboratively offers, in theory, an unlimited payload capacity, yet it poses substantial challenges in coordination, planning, estimation, and control. This project develops algorithms that enable agile, robust, and decentralized collaborative aerial manipulation of a cable-suspended load.

First, we developed a centralized algorithm, recently published in Science Robotics. It treats the quadrotors-cables-load system as a whole and solves the kinodynamic motion planning problem online, generating receding-horizon reference trajectories for all quadrotors. Onboard INDI (incremental nonlinear dynamic inversion) controllers then track these trajectories while compensating for cable tensions and other external disturbances. Notably, no additional sensors need to be mounted on the load; instead, the load pose is estimated from the quadrotors' state estimates. Here is the link to the paper’s project page.
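Why INDI can compensate for cable tension without modeling it is easiest to see in the textbook translational form of the law, T_cmd = T_prev + m (a_ref - a_meas): the unknown cable force is already contained in the measured acceleration, so it cancels out. The sketch below is an illustration under stated assumptions, not the controller from the paper. The function names (`true_accel`, `indi_thrust_command`), the z-up gravity convention, and the cable force value are hypothetical, and a real INDI loop also filters its measurements and includes an attitude/rate loop, both omitted here.

```python
# Minimal sketch of a translational INDI update (not the paper's implementation).
# Convention (assumption): world frame with z up, plant m*a = T + m*g + F_ext.
GRAVITY = (0.0, 0.0, -9.81)  # m/s^2


def true_accel(thrust, mass, f_ext):
    """Toy plant used only for this demo; F_ext is unknown to the controller."""
    return tuple((t + mass * g + f) / mass for t, g, f in zip(thrust, GRAVITY, f_ext))


def indi_thrust_command(thrust_prev, accel_meas, accel_ref, mass):
    """Incremental law: T_cmd = T_prev + m * (a_ref - a_meas).

    accel_meas already contains the effect of the external force (e.g. cable
    tension), so that force cancels and never has to be modeled explicitly,
    provided it changes slowly between control steps.
    """
    return tuple(t + mass * (ar - am) for t, ar, am in zip(thrust_prev, accel_ref, accel_meas))


mass = 0.6                          # kg, quadrotor mass quoted in the text
cable_force = (0.3, 0.0, -2.0)      # N, hypothetical cable pull on the drone
thrust = (0.0, 0.0, mass * 9.81)    # hover thrust that ignores the cable
a_meas = true_accel(thrust, mass, cable_force)   # drone is pulled toward the load
thrust = indi_thrust_command(thrust, a_meas, (0.0, 0.0, 0.0), mass)
a_new = true_accel(thrust, mass, cable_force)    # approximately (0, 0, 0)
print(thrust, a_new)
```

After one incremental step the commanded thrust has changed by exactly the opposite of the cable pull (about +2.0 N vertically and -0.3 N horizontally), even though `indi_thrust_command` never receives `cable_force`.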

A more recent effort explores the potential of the centralized method in the construction domain. Here we use four quadrotors (0.6 kg each) to cooperatively control the position and orientation of a large-span space frame (2.6 m × 0.5 m × 1.0 m) with a total mass of 3.0 kg. The frame is made of plastic (it was originally a DIY fort toy for kids), but it serves as a lightweight stand-in for structural assemblies. An open question is how to scale the system up to even larger and heavier structures.
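To make the scaling question concrete, here is a back-of-the-envelope hover calculation with the numbers above. It assumes (our simplification, not the paper's model) that the load weight is shared equally, that every cable is tilted by the same angle from vertical, and that cables are massless; the function name `hover_thrust_per_drone` is hypothetical.

```python
import math

G = 9.81  # m/s^2


def hover_thrust_per_drone(n_drones, drone_mass, load_mass, cable_tilt_deg=0.0):
    """Static hover thrust (N) each drone must produce.

    Simplifying assumptions: equal load sharing, identical cable tilt from
    vertical, massless cables, no aerodynamic interaction between drones.
    """
    theta = math.radians(cable_tilt_deg)
    tension_vertical = load_mass * G / n_drones              # each cable holds 1/n of the load weight
    tension_horizontal = tension_vertical * math.tan(theta)  # sideways pull grows with tilt
    # Thrust must cancel the drone's own weight plus the cable pull acting on the drone.
    return math.hypot(tension_horizontal, drone_mass * G + tension_vertical)


# Numbers from the space-frame experiment: 4 x 0.6 kg quadrotors, 3.0 kg frame.
vertical = hover_thrust_per_drone(4, 0.6, 3.0)       # about 13.24 N per drone
tilted = hover_thrust_per_drone(4, 0.6, 3.0, 30.0)   # larger: tilt adds a horizontal component
print(f"{vertical:.2f} N vs {tilted:.2f} N; load/drone-mass ratio {3.0 / (4 * 0.6):.2f}")
```

Under these assumptions each quadrotor carries 0.75 kg of payload, more than its own 0.6 kg, and any cable tilt raises the required thrust further; both effects tighten as structures get heavier.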

We have further explored two paths toward decentralized cooperative aerial manipulation. Here, decentralized means that the algorithms run fully onboard each quadrotor, without a central coordinator.

The first approach was recently accepted to IEEE MRS 2025. This work, entitled “Decentralized Real-Time Planning for Multi-UAV Cooperative Manipulation via Imitation Learning” (Link to the paper), uses imitation learning to train a student policy for each quadrotor that imitates the centralized teacher policy (the centralized planner described above). Each student has only partial observability: it observes only the states of its own quadrotor. Like the teacher, the student policy generates receding-horizon reference trajectories for its quadrotor. To ensure smooth trajectories, we use physics-informed neural networks (PINNs) as the student policy architecture, and training uses the DAgger (Dataset Aggregation) algorithm. This approach has shown decent trajectory-tracking performance and even generalizes beyond the training trajectories.
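DAgger alternates between rolling out the current student, having the teacher label every state the student visits, aggregating those labels, and retraining. The sketch below shows only that loop structure on a hypothetical one-dimensional toy problem: `teacher` stands in for the centralized planner, and a scalar linear least-squares model stands in for the PINN student. None of it reflects the paper's actual models, observations, or hyperparameters; the decaying mixing probability `beta` is one common DAgger schedule, assumed here.

```python
import random


def teacher(x):
    """Hypothetical expert: stands in for the centralized planner."""
    return -1.5 * x


def fit_student(dataset):
    """Least-squares fit of a linear student a = w * x (stand-in for the PINN)."""
    sxx = sum(x * x for x, _ in dataset)
    sxa = sum(x * a for x, a in dataset)
    return sxa / sxx if sxx > 0 else 0.0


def dagger(n_iters=5, horizon=20, dt=0.1, seed=0):
    rng = random.Random(seed)
    dataset = []  # aggregated (observation, expert action) pairs, never discarded
    w = 0.0       # untrained student
    for i in range(n_iters):
        beta = 0.5 ** i  # probability of executing the expert action; decays each iteration
        x = rng.uniform(-1.0, 1.0)
        for _ in range(horizon):
            dataset.append((x, teacher(x)))            # expert labels every visited state
            a = teacher(x) if rng.random() < beta else w * x
            x = x + dt * a                             # toy first-order dynamics
        w = fit_student(dataset)                       # retrain on the whole aggregate
    return w, dataset


w, data = dagger()
print(w, len(data))
```

Because states are collected under the student's own (mixed) rollouts, the training data covers the situations the student actually ends up in, which is the property that distinguishes DAgger from plain behavior cloning.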

The second approach, entitled “Decentralized Aerial Manipulation of a Cable-Suspended Load Using Multi-Agent Reinforcement Learning” (Link to the project), was recently presented at CoRL 2025. In this work, we explored the capability of multi-agent reinforcement learning, specifically multi-agent PPO (MA-PPO). We trained the policy in simulation with a centralized critic and decentralized actors. The resulting policy is deployed onboard each quadrotor and takes that quadrotor's own states and the load states as observations. Its actions are accelerations and angular rates, which are then executed by the onboard INDI controllers. Besides setpoint-tracking performance similar to that of the centralized method, the policy also exhibits emergent fault tolerance: when one quadrotor fails in flight, the remaining quadrotors can still control the load's remaining controllable degrees of freedom (DoFs).
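The split between centralized training and decentralized execution shows up mainly in what each network sees. The sketch below assembles the two inputs under an assumed layout: a 13-dimensional rigid-body state (position, velocity, unit quaternion, body rates) for each quadrotor and for the load. The class and function names and the state layout are hypothetical; the paper's exact observation layout is not given here and may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RigidBodyState:
    """Hypothetical 13-D state: position, velocity, unit quaternion, body rates."""
    pos: List[float]    # 3
    vel: List[float]    # 3
    quat: List[float]   # 4, (w, x, y, z)
    rates: List[float]  # 3

    def flat(self) -> List[float]:
        return [*self.pos, *self.vel, *self.quat, *self.rates]


def actor_observation(own: RigidBodyState, load: RigidBodyState) -> List[float]:
    """Decentralized actor input (runs onboard): this quadrotor's state plus the load state."""
    return own.flat() + load.flat()


def critic_observation(quads: List[RigidBodyState], load: RigidBodyState) -> List[float]:
    """Centralized critic input (training only): every quadrotor's state plus the load state."""
    obs: List[float] = []
    for q in quads:
        obs += q.flat()
    return obs + load.flat()


hover = RigidBodyState([0.0, 0.0, 1.5], [0.0] * 3, [1.0, 0.0, 0.0, 0.0], [0.0] * 3)
load = RigidBodyState([0.0, 0.0, 0.5], [0.0] * 3, [1.0, 0.0, 0.0, 0.0], [0.0] * 3)
quads = [hover, hover, hover]
a_obs = actor_observation(quads[0], load)
c_obs = critic_observation(quads, load)
print(len(a_obs), len(c_obs))  # 26 52
```

Only `actor_observation` is needed onboard at deployment; the critic and its joint input exist only during training, which is what lets execution stay fully decentralized.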